
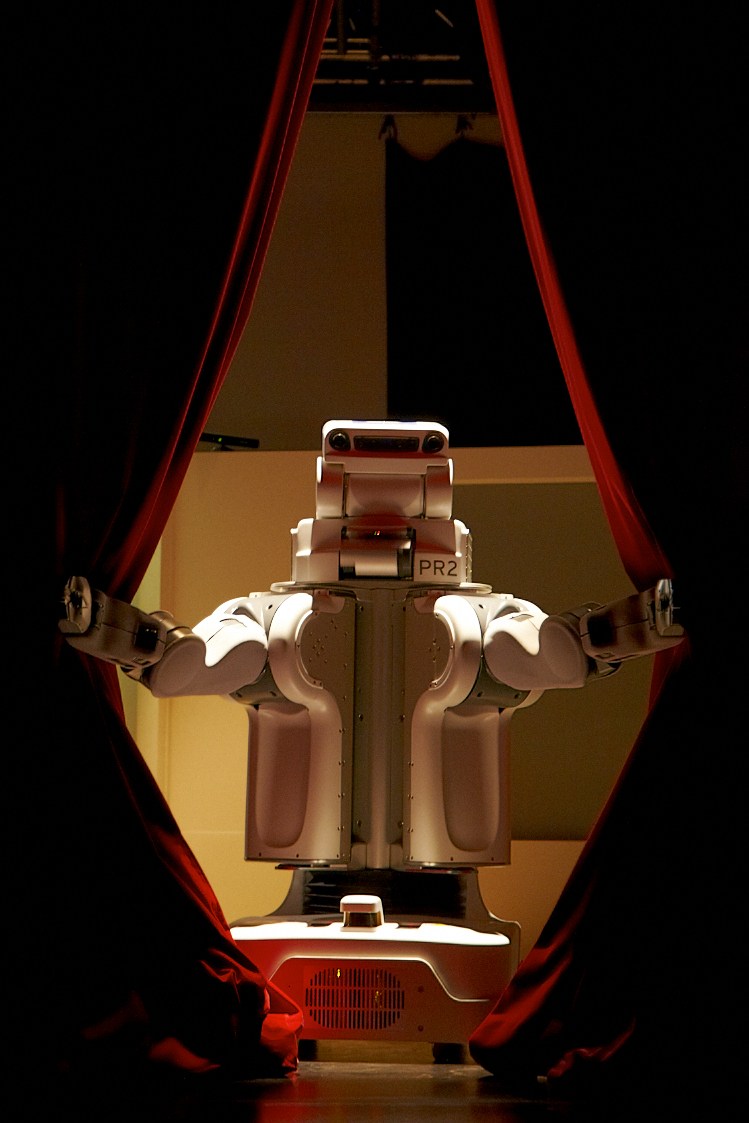

Roboscopie, the robot takes the stage!


Watch it!

Short version (3 min)

Full-length version (18 min)

The storyline

Xavier and PR2, his robotic companion, try to find ways to understand each other.

Xavier and PR2 share a white, almost empty stage. To get the robot to see his world, Xavier must stay recognized by the human-tracking module mounted on the wall, and must stick 2D barcodes everywhere in place of the real objects. The robot can read and identify these barcodes, and as the stage gets covered with tags, the robot builds its own 3D world populated with the matching objects: a phone, a lamp, a hanger...

As Xavier draws more and more of these 2D tags by hand, the robot tries to help. It first brings a bottle of water, then a fan... which blows away all of Xavier's codes. Angry, Xavier leaves, and PR2 remains alone.

Night falls, and the robot decides to explore the stage, which is scattered with barcodes.

The next morning, Xavier enters, and as soon as the tracking system recognizes him, he discovers that the robot's 3D model is a mess, full of random objects: an elephant, a boat, a van... Xavier resets the robot's model with a special black tag and starts tidying up the place.

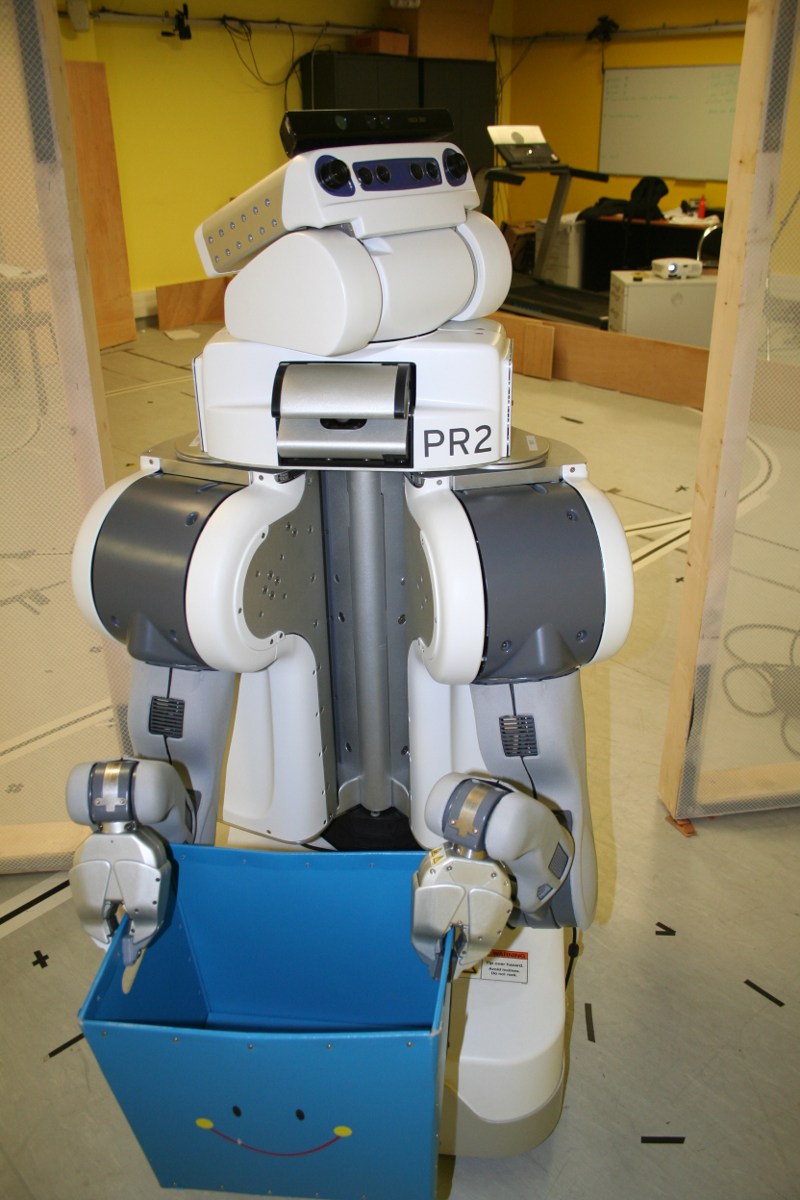

The robot decides to help and fetches a trash bin, but starts to behave strangely (or is it playing?), and Xavier and PR2 start a clumsy basketball game with paper balls.

Suddenly the robot gives up, and a new program slowly starts: a home-training session. Xavier seems to be expecting it, changes his T-shirt, and starts the exercises. But as the program goes on, the robot looks more and more menacing, until Xavier shouts "Stop!".

One after the other, Xavier shows the robot the objects (actually, the barcodes of the objects), explaining that they are all fake, and one by one, the robot connects each object in its mind to the idea of being fake. And like the robot, we realize that everything was just an experiment.

Download the original script (in French!)

Making-of

On the technical side

The PR2 robot was running software developed at LAAS/CNRS. While the performance tries to depict some of the challenges in human-robot interaction studied at LAAS/CNRS, including the autonomy a robot needs when working with humans, the robot was partially pre-programmed for this theater performance.

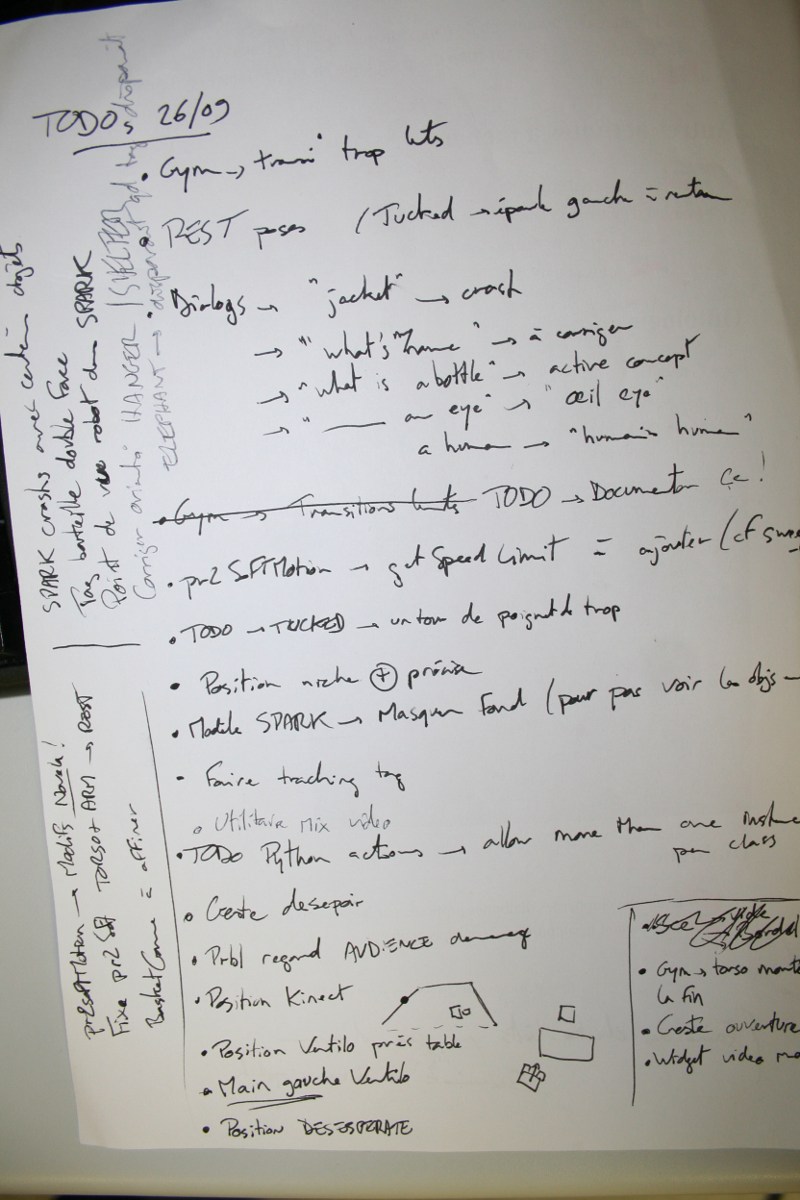

Most of the behaviours were written in Python, relying on both the ROS middleware running on the PR2 and LAAS's Genom+Pocolibs modules.

What was pre-programmed?

- While the real perception routines were running (see below), the robot had no way to synchronize with the human during the play: each sequence was started manually by one of the engineers.

Click here to view the performance's master script

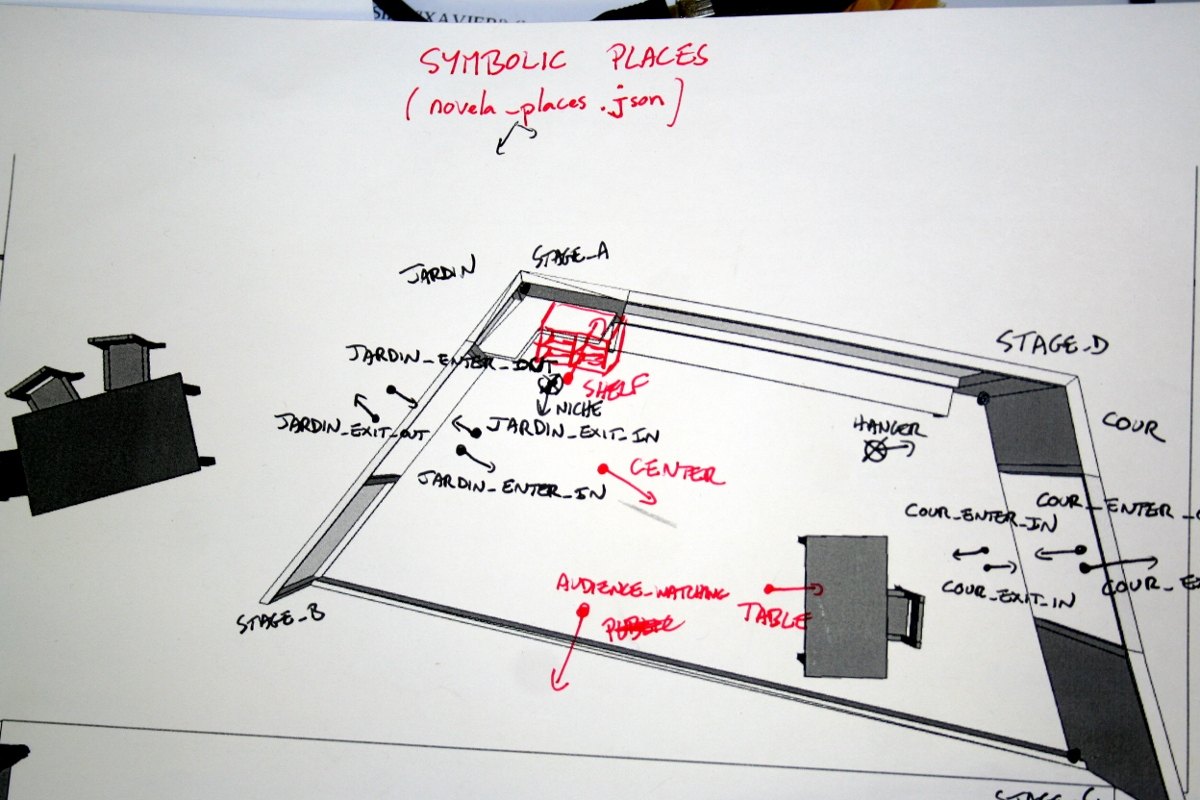

Places on the stage were hard-coded: for instance, the robot knew the position of the table from the beginning, as well as the position of the entrance door, etc.
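The manual stepping and the hard-coded places can be pictured with a minimal Python sketch. This is not the actual master script: the place names, coordinates and the `run_show` helper are hypothetical, and the engineer's "go" signal is abstracted as a callable passed in by the caller.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical hard-coded stage locations: (x, y, theta) in metres and
# radians in the map frame. The real values were specific to the stage.
PLACES: Dict[str, Tuple[float, float, float]] = {
    "engineers_desk": (0.0, 0.0, 0.0),
    "stage_center": (3.0, 1.5, 3.14),
    "table": (4.0, 2.5, 1.57),
    "entrance_door": (5.5, 0.0, 0.0),
}

# A scene is a name plus the behaviour to execute.
Scene = Tuple[str, Callable[[], None]]


def run_show(scenes: List[Scene], wait_for_go: Callable[[str], None]) -> List[str]:
    """Play the scenes in order, each one only after the engineer's go signal.

    The robot never decides by itself when a scene starts: there is no
    synchronization with the actor, only the engineer's manual trigger.
    """
    played: List[str] = []
    for name, behaviour in scenes:
        wait_for_go(name)  # blocks until the engineer triggers this scene
        behaviour()
        played.append(name)
    return played
```

On stage, `wait_for_go` could be as simple as `lambda name: input(f"Press Enter to start {name}... ")`, so that the engineer paces the robot to the actor by hand.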


- Manipulation tasks (like grasping the fan or the paper bin) were greatly simplified: the robot would simply open its gripper in front of itself and wait for "something" to be detected in its hand, then close the gripper. Likewise, the special postures the robot used to enter or leave the stage with an object in hand (required to avoid colliding with the door) were all pre-defined.

- At the end of the play, when Xavier talks to the robot ("Stop!", "Look at this phone!", "Everything is fake", etc.), the sentences were typed into the system by hand. We could have used speech recognition, as we do in the lab, but converting speech to text (which the robot can then process) is relatively slow and error-prone, so we decided to avoid it on stage.

- While what Xavier said was successfully understood by the robot (see below), the actions that followed (like looking at the phone, turning the head back to the audience, etc.) were triggered manually.
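The simplified grasping strategy (open the gripper, wait until "something" is detected in the hand, then close it) amounts to a small polling loop. The sketch below assumes a hypothetical `Gripper` interface with `open()`, `close()` and `object_detected()` methods; it is not a real PR2 or ROS API, and how the detection was actually done on the robot is not described here. The timeout is also an addition of the sketch, so that the robot does not wait forever.

```python
import time
from typing import Protocol


class Gripper(Protocol):
    """Hypothetical gripper interface (not a real PR2 or ROS API)."""

    def open(self) -> None: ...
    def close(self) -> None: ...
    def object_detected(self) -> bool: ...


def wait_and_grasp(gripper: Gripper, timeout_s: float = 30.0, period_s: float = 0.1) -> bool:
    """Open the gripper, wait for an object to be detected in it, then close it.

    Returns True if an object was detected (and the gripper closed) before
    the timeout, False otherwise; in that case the gripper is left open.
    """
    gripper.open()
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if gripper.object_detected():
            gripper.close()
            return True
        time.sleep(period_s)  # poll the detection at a fixed period
    return False
```

No grasp planning is involved: the robot relies on the actor (or an engineer) placing the object in its open hand.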

All sound effects were added live during the performance; they were not triggered by the robot itself. You can download the original sound effects here.

What was autonomously managed by the robot?

All navigation tasks were computed live by PR2, using the ROS navigation stack. The main script just tells the robot, for instance, to go from the engineers' desk to the center of the stage; the robot then finds a path that avoids obstacles.
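The ROS navigation stack plans a global path over a costmap of the environment and then follows it with a local planner. As a much-simplified illustration of the "find a path that avoids obstacles" step only, here is a toy A* search on a 4-connected occupancy grid. It is not the navigation stack's code, and the grid in the usage example is made up.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where '#' marks an obstacle.

    The Manhattan distance never overestimates the remaining cost on a
    4-connected grid with unit steps, so the returned path is optimal.
    Returns None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]  # (f, g, cell)
    came_from: Dict[Cell, Cell] = {}
    best_g: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale heap entry, a shorter route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None
```

For instance, `astar(["......", ".####.", "......"], (0, 0), (2, 5))` returns an 8-cell path that goes around the row of obstacles.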

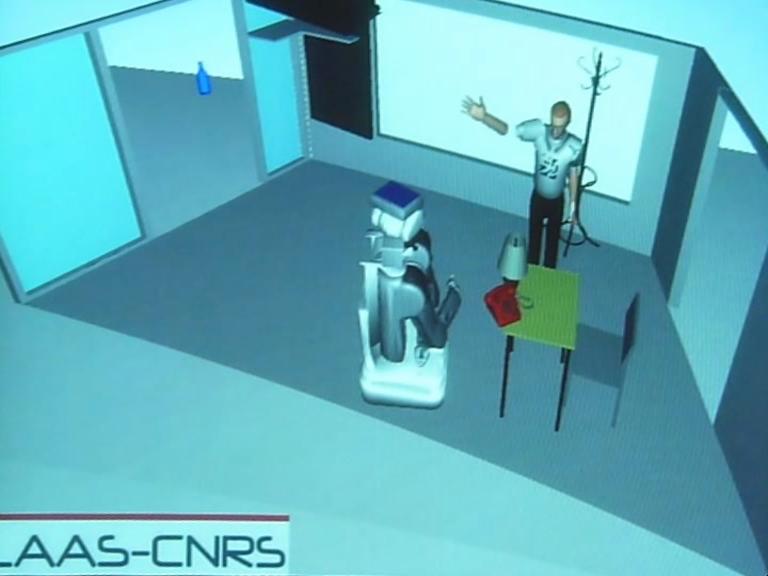

The 3D world displayed above the stage during the show is a live capture of the Move3D and SPARK software. This software is used daily on the robot to compute trajectories, symbolic locations, and the visibility and reachability of objects...


However, during the performance, we deactivated the computation of symbolic facts (like xavier looksAt jacket, RED_PHONE isOn table, ...), which is not reliable enough to be used on stage.
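To give an idea of what computing a fact such as `RED_PHONE isOn table` involves, here is a toy geometric rule: an object is "on" another if its base lies within a small vertical tolerance of the other's top surface and its centre is above that surface's footprint. This is a hypothetical heuristic on axis-aligned bounding boxes, not SPARK's actual rule, and the tolerance value is arbitrary.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box in metres (hypothetical object model)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float


def is_on(obj: Box, support: Box, z_tolerance: float = 0.03) -> bool:
    """Toy rule for 'obj isOn support'."""
    # Centre of the object's footprint in the horizontal plane.
    cx = (obj.x_min + obj.x_max) / 2
    cy = (obj.y_min + obj.y_max) / 2
    over_footprint = support.x_min <= cx <= support.x_max and support.y_min <= cy <= support.y_max
    # The object's base must (almost) touch the support's top surface.
    touching_top = abs(obj.z_min - support.z_max) <= z_tolerance
    return over_footprint and touching_top
```

With a rule like this, a few centimetres of error in the estimated poses can flip the answer near the tolerance, which illustrates how fragile such symbolic facts can be.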

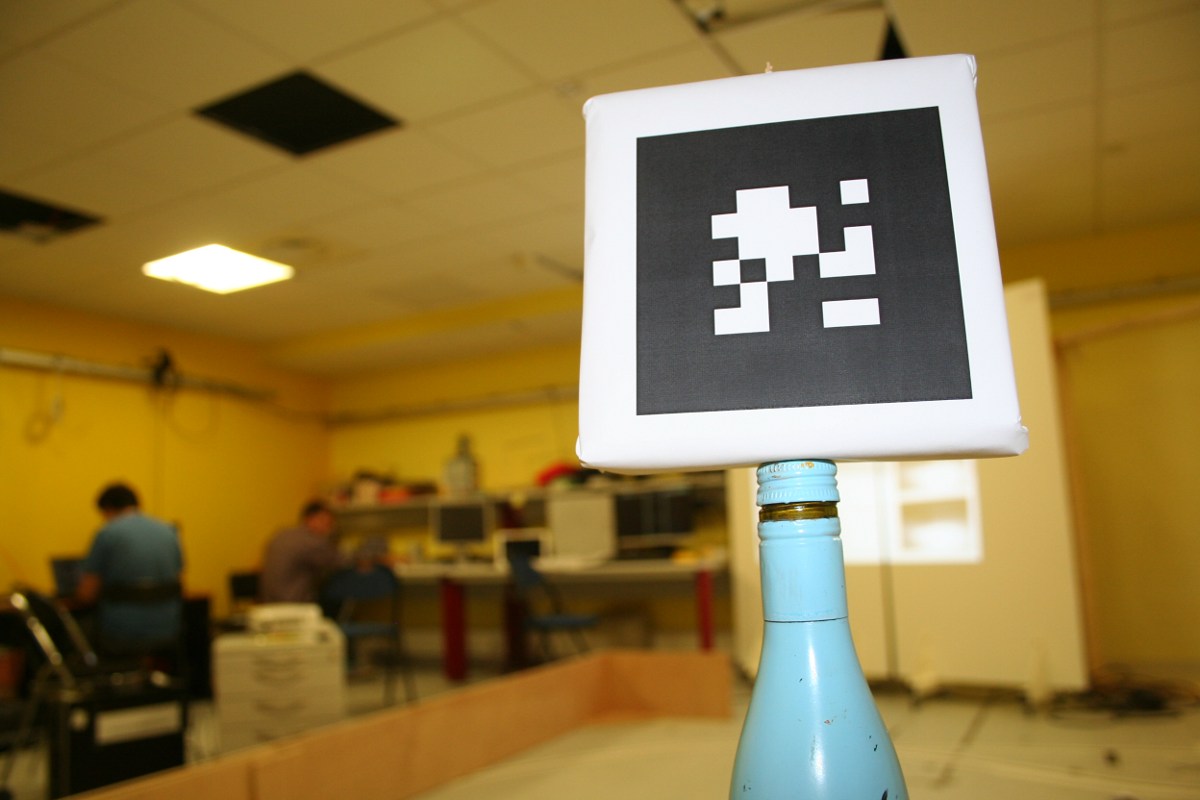

The 2D barcodes are actually a key perception mechanism for our PR2. In the lab, all our objects have such 2D tags attached to them. They are used to identify and localize (in both position and orientation) the objects surrounding the robot.
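Tag-based perception can be pictured as a lookup from tag identifiers to object models, each detection adding or moving the corresponding object in the robot's world model. The tag IDs, model names and the `Detection` structure below are hypothetical, the detector that estimates a tag's position and orientation from the camera image is assumed to exist, and `RESET_TAG` merely echoes the black tag of the storyline.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Position (x, y, z) in metres plus orientation quaternion (qx, qy, qz, qw).
Pose = Tuple[Tuple[float, float, float], Tuple[float, float, float, float]]

# Hypothetical tag IDs and model names.
TAG_TO_OBJECT: Dict[int, str] = {12: "RED_PHONE", 17: "LAMP", 23: "HANGER"}
RESET_TAG = 0  # hypothetical ID standing in for the play's "special black tag"


@dataclass
class Detection:
    tag_id: int
    pose: Pose  # estimated by a tag detector, not shown here


class WorldModel:
    """Objects the robot believes are around it, keyed by name."""

    def __init__(self) -> None:
        self.objects: Dict[str, Pose] = {}

    def update(self, det: Detection) -> None:
        if det.tag_id == RESET_TAG:
            self.objects.clear()  # forget everything seen so far
            return
        name = TAG_TO_OBJECT.get(det.tag_id)
        if name is not None:  # unknown tags are ignored
            # Add the object, or move it if it is already known.
            self.objects[name] = det.pose
```

Because the 3D model shown for an object is chosen only from the tag's ID, a drawn tag is enough to make a phone "appear" in the robot's world.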


As can be seen in the 3D model of the world perceived by the robot, PR2 knew where Xavier was and what his posture was. This is done using the Microsoft Kinect and the OpenNI human tracker. On several occasions, the robot automatically tracks the human's head or hands with this system.
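Tracking the human's head or hands boils down to pointing the robot's head at a 3D point given by the skeleton tracker. A minimal sketch of that geometry, assuming the target is already expressed in a frame centred on the head's pan/tilt axes with x forward, y left and z up (the usual ROS body-frame convention); the actual PR2 head controller and its joint limits are not modelled.

```python
import math
from typing import Tuple


def look_at(x: float, y: float, z: float) -> Tuple[float, float]:
    """Pan and tilt angles (radians) that point the head's x axis at (x, y, z).

    Frame: x forward, y left, z up. Pan is a rotation about z (positive =
    turn left); tilt is a rotation about y (positive = look down).
    """
    pan = math.atan2(y, x)
    tilt = math.atan2(-z, math.hypot(x, y))
    return pan, tilt
```

Calling this each time the tracker reports a new head or hand position keeps the robot's gaze on the actor.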

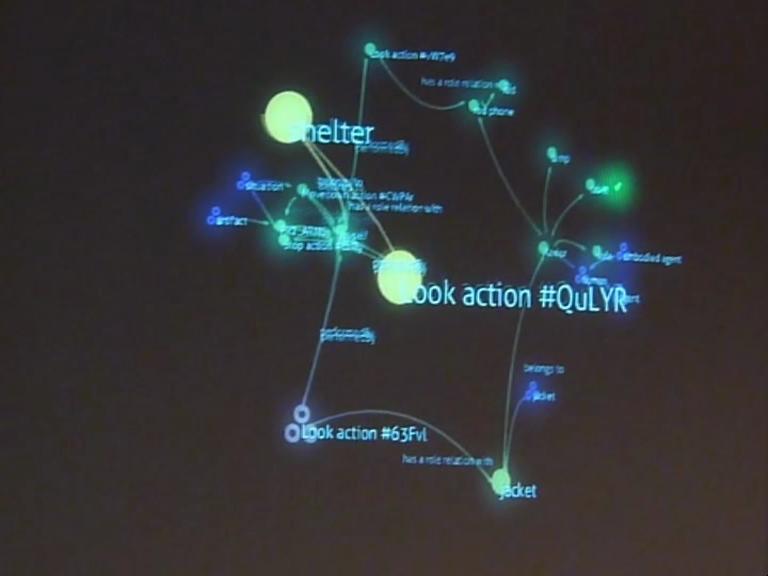

At the end, Xavier talks to the robot. The textual version of what he said was fed to the system as is. Natural language understanding is done by the Dialogs module, which makes extensive use of the oro-server knowledge base to make sense of the words in the current context (you can have a look at the underlying model, presented as an OWL-DL ontology). The result of the language processing was then added back to the knowledge base and automatically displayed by the oro-view OpenGL ontology viewer.


Hence, the sentence <look at this phone> gets translated into symbolic facts: [human desires action1, action1 type Look, action1 receivedBy RED_PHONE]. The robot can tell that <this phone> is indeed RED_PHONE by taking into account what the human is focusing on.
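These facts are subject-predicate-object triples. A toy sketch of how <this phone> could be resolved against such a store: among the individuals of class Phone, keep the one the human is currently looking at. The in-memory triple set, the `Phone` class name and the `looksAt` fact below are illustrative assumptions; the real system relies on the oro-server knowledge base and the Dialogs module, whose APIs are not reproduced here.

```python
from typing import List, Optional, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Illustrative knowledge base (hypothetical content).
KB: Set[Triple] = {
    ("RED_PHONE", "type", "Phone"),
    ("BLUE_PHONE", "type", "Phone"),
    ("LAMP", "type", "Lamp"),
    ("human", "looksAt", "RED_PHONE"),
}


def resolve_this(kb: Set[Triple], cls: str, speaker: str = "human") -> Optional[str]:
    """Resolve 'this <cls>' to the individual of class cls the speaker looks at."""
    candidates = {s for (s, p, o) in kb if p == "type" and o == cls}
    focused = {o for (s, p, o) in kb if s == speaker and p == "looksAt"}
    matches = candidates & focused
    # None when nothing matches or when the reference stays ambiguous.
    return next(iter(matches)) if len(matches) == 1 else None


def look_order(kb: Set[Triple], cls: str) -> List[Triple]:
    """Turn 'look at this <cls>' into symbolic facts like the ones above."""
    target = resolve_this(kb, cls)
    if target is None:
        return []
    return [
        ("human", "desires", "action1"),
        ("action1", "type", "Look"),
        ("action1", "receivedBy", target),
    ]
```

Without the `looksAt` fact, "this phone" would be ambiguous between the two phones and the sketch returns no order at all.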

Since the computation of symbolic facts was deactivated (see above), we had to manually add several symbolic facts to a so-called scenario-specific ontology. You can see it online.

Press coverage

"PR2 takes the stage", Willow Garage blog

Scientific relevance

One of the main scientific challenges the LAAS/CNRS laboratory tries to tackle is autonomy: how to build a robot that is as autonomous as possible. Acting in a theatre play requires almost the opposite ability: actors are asked to closely follow the director's artistic choices.

From a research point of view, autonomy It means that, as robotics scientists, we do not want our robot to be too autonomous. to act in a theatre performance sim