RT-SLAM

RT-SLAM stands for Real Time SLAM (Simultaneous Localization And Mapping).

Presentation

RT-SLAM is a fast SLAM library and test framework based on an Extended Kalman Filter (EKF). Its main qualities are:

- genericity: generic with respect to sensor models, landmark types, landmark models, landmark reparametrization, and bias estimation;

- speed: real time at 60 fps (VGA, grayscale) on a decent machine (at least one Core 2 core at 2.2 GHz);

- flexibility: different strategies for sequencing estimation and image processing (active search), and an independent building block for hierarchical multi-map and multi-robot architectures;

- robustness: near-optimal distribution of landmarks, detection of data association errors (gating, RANSAC);

- developer-friendly: visualization tools (2D and 3D), step-by-step offline replay, logs, simulation;

- open source: written in C++ for Linux and MacOS.

For now it provides:

- Landmarks: Anchored Homogeneous Points (Inverse Depth) that can be reparametrized into Euclidean Points;

- Sensors: Pinhole cameras;

- Prediction: Constant velocity model, inertial sensor;

- Data association: Active search, 1-point RANSAC, and mixed strategies.

Documentation

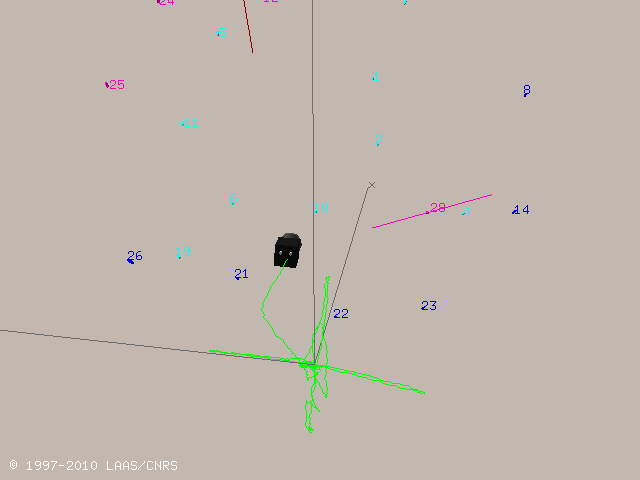

Screenshots and videos

- Demo 1 (v.a, 1'33, 23 MB, H264 AVI): hand-held camera alone at 60 fps indoors, with several loop closures.

- Demo 2 (v.b, 1'06, 20 MB, H264 AVI): hand-held IMU+camera at 50 fps indoors, with very high dynamics.

- [Demo 3](http://homepages.laas.fr/croussil/videos/2011-06-09_rtslam-inertial-robot-grass.avi) (v.a, 1'25, 27 MB, H264 AVI): IMU+camera on a rover robot on grass, with short-term memory (lost landmarks are removed, no loop closure).

Roadmap

- Stabilize and make a release

- Odometry SLAM

- Multimap SLAM

- Segments SLAM